Building Production-Ready RAG Systems with LangChain

Learn how to architect and deploy retrieval-augmented generation systems that scale. We cover vector databases, chunking strategies, and prompt engineering best practices.

Introduction

Retrieval-Augmented Generation (RAG) has emerged as one of the most practical applications of large language models in enterprise settings. Unlike fine-tuning, RAG allows organizations to leverage their proprietary data without the computational overhead of model training.

The Architecture

A production RAG system consists of several key components:

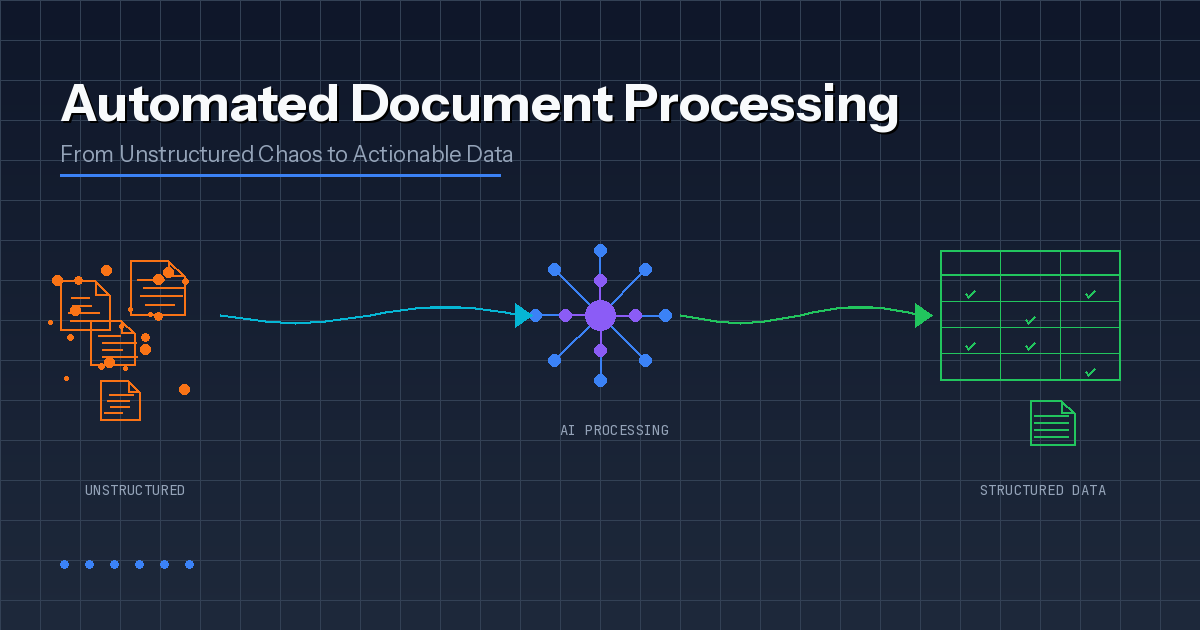

1. Document Processing Pipeline

Your documents need to be chunked intelligently. We recommend:

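from langchain.text_splitter import RecursiveCharacterTextSplitter

# Split on the coarsest separator that fits, falling back to finer ones.
splitter = RecursiveCharacterTextSplitter(
    chunk_size=1000,    # maximum chunk length, in characters by default
    chunk_overlap=200,  # characters shared between neighboring chunks
    separators=["\n\n", "\n", ". ", " "]  # paragraphs, lines, sentences, words
)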
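To make the interaction between `chunk_size` and `chunk_overlap` concrete, here is a minimal pure-Python sketch of fixed-size windows with overlap. It is a simplified illustration, not the algorithm `RecursiveCharacterTextSplitter` uses (that one also respects the separators), and the function name `chunk_with_overlap` is a hypothetical helper introduced only for this example.

```python
def chunk_with_overlap(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Split text into character windows; neighbors share chunk_overlap characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")

    step = chunk_size - chunk_overlap  # how far each window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


# Tiny values so the overlap is easy to see.
chunks = chunk_with_overlap("abcdefghij", chunk_size=4, chunk_overlap=2)
print(chunks)
```

Each window advances by `chunk_size - chunk_overlap` characters, so a larger overlap preserves more context across chunk boundaries at the cost of storing and embedding more chunks.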

2. Vector Database Selection

Choose based on your scale:

| Database | Best For | Hosted Option |
|---|---|---|
| Pinecone | Enterprise scale | Yes |
| Weaviate | Hybrid search | Yes |
| Chroma | Prototyping | No |
| pgvector | PostgreSQL users | Via Supabase |
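Whichever database you pick, the core operation is the same: find the stored vectors closest to a query vector. The sketch below shows that operation as a brute-force cosine-similarity search in plain Python. It assumes a tiny in-memory dictionary of made-up two-dimensional vectors; the names `cosine_similarity`, `top_k`, and the `doc_*` IDs are hypothetical, and real vector databases use approximate indexes rather than a full scan.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: list[float], index: dict[str, list[float]], k: int = 2):
    """Score every stored vector against the query and return the k best (id, score) pairs."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical embeddings; real ones have hundreds or thousands of dimensions.
index = {"doc_a": [1.0, 0.0], "doc_b": [0.0, 1.0], "doc_c": [1.0, 1.0]}
results = top_k([1.0, 0.1], index, k=2)
print(results)
```

A full scan like this costs time proportional to the number of stored vectors, which is one reason the choice of database starts to matter as the corpus grows.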

3. Retrieval Strategy

Simple similarity search often falls short. Consider:
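One such strategy, sketched here under assumptions, is hybrid search (listed in the table above as a Weaviate strength): run a keyword search and a vector search separately, then merge the two ranked lists. A common way to merge them is reciprocal rank fusion (RRF), which scores each document by summing `1 / (k + rank)` over the lists it appears in. The function name, the document IDs, and the choice of `k=60` are illustrative assumptions, not settings taken from any particular database.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into one list, best first."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            # Documents near the top of any list contribute the most.
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


# Hypothetical results from a vector search and a keyword search.
vector_hits = ["doc_a", "doc_b", "doc_c"]
keyword_hits = ["doc_c", "doc_a", "doc_d"]

fused = reciprocal_rank_fusion([vector_hits, keyword_hits])
print(fused)
```

Because RRF uses only ranks, it needs no calibration between the keyword scores and the vector similarity scores, which are on different scales.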

Evaluation Metrics

Track these metrics in production:
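Two retrieval-quality metrics that are commonly tracked, used here purely as an illustration, are recall@k (the share of relevant documents that appear in the top k results) and reciprocal rank (one divided by the position of the first relevant result). The sketch assumes you have a labeled set of relevant document IDs per query; the function names and the sample IDs are hypothetical.

```python
def recall_at_k(retrieved: list[str], relevant: list[str], k: int) -> float:
    """Fraction of the relevant documents that appear in the top-k retrieved results."""
    if not relevant:
        return 0.0
    hits = set(retrieved[:k]) & set(relevant)
    return len(hits) / len(relevant)


def reciprocal_rank(retrieved: list[str], relevant: list[str]) -> float:
    """1 / rank of the first relevant result, or 0.0 if none was retrieved."""
    relevant_set = set(relevant)
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant_set:
            return 1.0 / rank
    return 0.0


# Hypothetical query: what the retriever returned vs. what a labeler marked relevant.
retrieved = ["doc_b", "doc_a", "doc_d"]
relevant = ["doc_a", "doc_c"]

print(recall_at_k(retrieved, relevant, k=2))
print(reciprocal_rank(retrieved, relevant))
```

Averaging the reciprocal rank over many queries gives mean reciprocal rank (MRR); tracking both numbers over time can show whether a change to chunking or retrieval helped or hurt.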

Conclusion

Building production RAG systems requires careful attention to each component. Start simple, measure everything, and iterate based on real user feedback.